Kimi K2: Open Agentic Intelligence

Kimi K2 is a mixture-of-experts model designed for frontier knowledge, reasoning, and coding tasks, and built for autonomous action and problem-solving.

Developed by MoonshotAI, Kimi K2 has 1 trillion total parameters and a 384-expert mixture-of-experts architecture, which keeps inference efficient because only a subset of the experts runs for each token. Kimi K2 supports a 128K-token context length and reports industry-leading results in programming, mathematical reasoning, knowledge Q&A, and other domains.

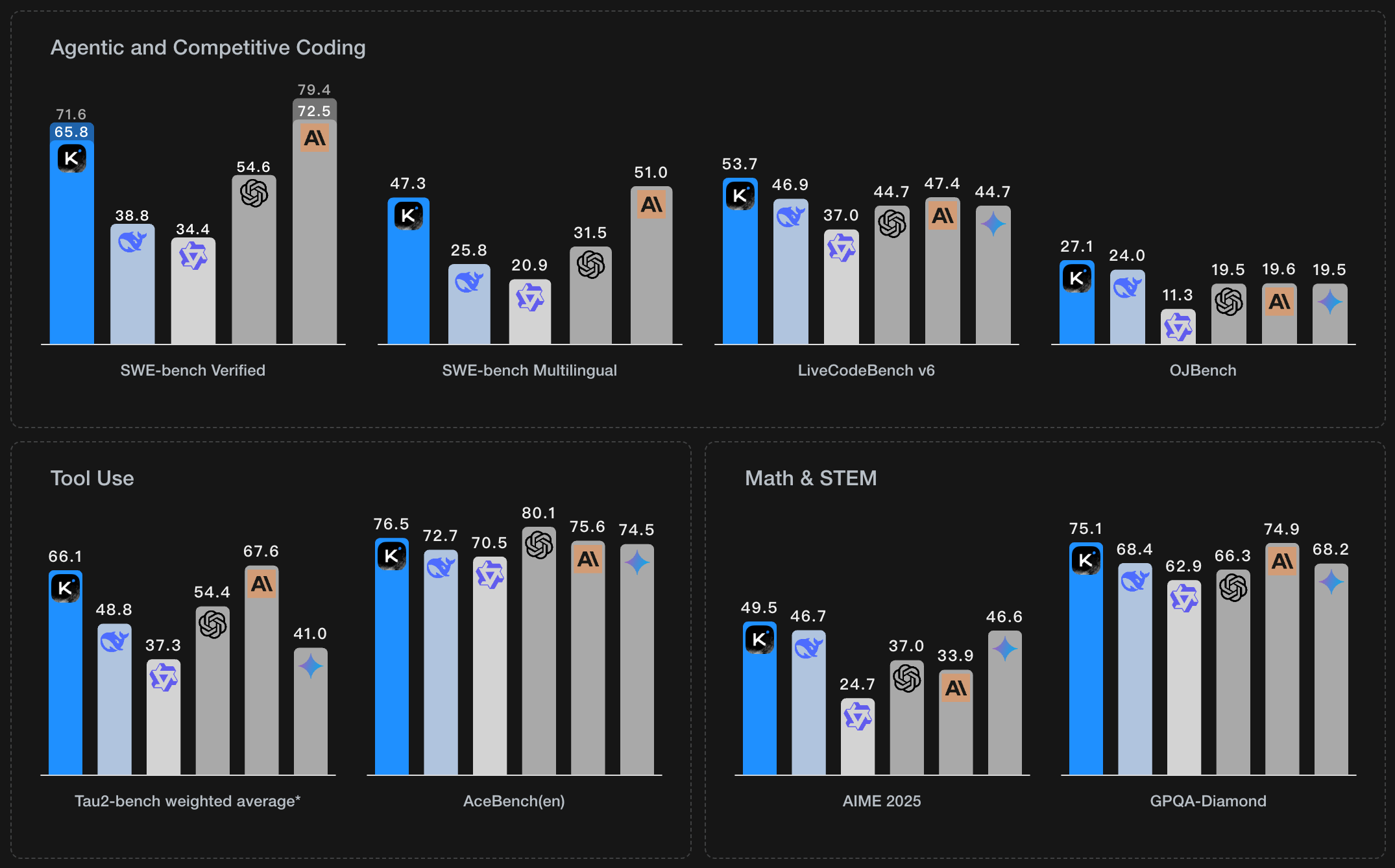

Leading Performance Across Benchmarks

Kimi K2 achieves state-of-the-art results compared with other leading models across a range of evaluation benchmarks.

Agentic Capabilities

Autonomous problem-solving with tool interaction

High Performance

State-of-the-art reasoning and coding

Mixture-of-Experts

384 experts with 32B activated params

Key Features of Kimi K2

Discover the innovations that make Kimi K2 exceptional at frontier knowledge, reasoning, and coding tasks.

What is Kimi K2?

Kimi K2 is a groundbreaking mixture-of-experts model that achieves state-of-the-art performance in knowledge, reasoning, math, and coding benchmarks while maintaining efficiency through intelligent routing.

About Kimi K2 Large Language Model

Kimi K2 is an open-source large language model developed by MoonshotAI (Dark Side of the Moon) that adopts a Mixture of Experts (MoE) architecture. As a new generation of agentic AI, Kimi K2 has 1 trillion total parameters and 384 experts, with 32 billion parameters activated for each token.
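To make the idea of sparse activation concrete, here is a minimal sketch of generic top-k expert routing in plain Python. It is not Kimi K2's actual router: the softmax gate, the `TOP_K = 8` value, and the toy scalar "experts" are all assumptions chosen for illustration, and only the expert count (384) and the parameter totals come from the text above.

```python
import math
import random

NUM_EXPERTS = 384  # expert count stated on this page
TOP_K = 8          # assumption for illustration; not stated on this page


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route(router_logits, top_k=TOP_K):
    """Return (expert_index, weight) pairs for the top_k highest-scoring experts.

    Weights are renormalized so they sum to 1 over the selected experts.
    """
    probs = softmax(router_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]


def moe_forward(x, router_logits, experts, top_k=TOP_K):
    """Weighted sum of the outputs of the selected experts only."""
    return sum(w * experts[i](x) for i, w in route(router_logits, top_k))


rng = random.Random(0)
# Toy experts: each one scales its input by a different constant in [0.5, 1.5].
experts = [(lambda x, s=rng.uniform(0.5, 1.5): s * x) for _ in range(NUM_EXPERTS)]
# Toy router scores; a real router computes these from the token's hidden state.
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]

selected = route(logits)
y = moe_forward(2.0, logits, experts)

# Share of parameters active per token, using the figures quoted above.
active_fraction = 32e9 / 1e12  # 32 billion of 1 trillion, i.e. about 3.2%
```

Only the selected experts are evaluated for a given token, which is why the compute per token tracks the activated parameter count rather than the total.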

Kimi K2 performs strongly across multiple benchmarks, with industry-leading results reported in programming, mathematical reasoning, knowledge Q&A, and other fields. The model supports a 128K-token context window, which lets it handle long documents and extended, complex dialogues.
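As a rough illustration of working within a fixed context window, the sketch below estimates token counts and splits an oversized document into pieces that fit. The reading of "128K" as 131,072 tokens, the 4-characters-per-token heuristic, and the helper names `estimate_tokens` and `split_to_fit` are all assumptions; a real application should count tokens with the model's own tokenizer.

```python
import math

CONTEXT_WINDOW = 128 * 1024  # assumption: "128K" read as 131,072 tokens


def estimate_tokens(text, chars_per_token=4.0):
    """Crude token estimate from character count (heuristic, not a tokenizer)."""
    return math.ceil(len(text) / chars_per_token)


def split_to_fit(text, reserved_for_output=4096, chars_per_token=4.0):
    """Split text into chunks whose estimated size fits the context window.

    reserved_for_output leaves room for the model's reply (hypothetical value).
    """
    budget_tokens = CONTEXT_WINDOW - reserved_for_output
    max_chars = int(budget_tokens * chars_per_token)
    return [text[i:i + max_chars] for i in range(0, len(text), max_chars)]


long_document = "x" * 1_000_000
chunks = split_to_fit(long_document)
```

Splitting on raw character offsets is the simplest possible policy; splitting on paragraph or section boundaries usually preserves more meaning per chunk.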

Unlike traditional large language models, Kimi K2 is built for tool calling and agentic use: it can carry out complex, multi-step tasks autonomously and interact with external tools and APIs to solve problems end to end.
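The tool-calling loop described above can be sketched in a few lines. This is a generic pattern, not Kimi K2's API: `stub_model` is a hypothetical stand-in for a real model call, `get_weather` is a hypothetical tool with canned output, and the message and `tool_call` shapes are assumptions made for the example.

```python
import json


def get_weather(city):
    """Hypothetical tool; returns canned data instead of calling a real service."""
    return {"city": city, "temp_c": 21}


TOOLS = {"get_weather": get_weather}


def stub_model(messages):
    """Stand-in for a model call: requests one tool call, then answers."""
    last = messages[-1]
    if last["role"] == "tool":
        data = json.loads(last["content"])
        return {"role": "assistant",
                "content": f"It is {data['temp_c']} C in {data['city']}."}
    return {"role": "assistant",
            "tool_call": {"name": "get_weather",
                          "arguments": json.dumps({"city": "Beijing"})}}


def run_agent(user_prompt, model=stub_model, max_steps=5):
    """Alternate between model replies and tool execution until a final answer."""
    messages = [{"role": "user", "content": user_prompt}]
    for _ in range(max_steps):
        reply = model(messages)
        messages.append(reply)
        call = reply.get("tool_call")
        if call is None:
            # No tool requested, so this reply is the final answer.
            return reply["content"], messages
        func = TOOLS[call["name"]]
        result = func(**json.loads(call["arguments"]))
        # Feed the tool result back so the model can use it on the next turn.
        messages.append({"role": "tool", "name": call["name"],
                         "content": json.dumps(result)})
    raise RuntimeError("agent did not finish within max_steps")


answer, transcript = run_agent("What is the weather in Beijing?")
```

The `max_steps` cap is a deliberate design choice: it keeps a model that repeatedly requests tools from looping forever.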

Technical Features

- 1-trillion-parameter mixture-of-experts architecture
- 384 experts with intelligent routing
- 128K-token long-context support
- Trained on 15.5 trillion tokens
- Open-source license that permits commercial use

Application Scenarios

- Code generation and debugging
- Mathematical reasoning and computation
- Long document analysis
- Intelligent agent application development
- Multilingual dialogue interaction

Kimi K2 Users Say

Early adopters share their experience with Kimi K2's advanced agentic intelligence capabilities.

"Kimi K2's agentic capabilities are impressive. The model demonstrates sophisticated reasoning and can autonomously solve complex problems that require multiple steps."

"The coding performance is outstanding. Kimi K2 understands context better than any model I've tested and generates production-ready code consistently."

"The mixture-of-experts architecture is brilliantly implemented. It achieves remarkable efficiency while maintaining state-of-the-art performance across benchmarks."

"Kimi K2's tool integration capabilities are game-changing. The model can seamlessly interact with external APIs and perform autonomous actions."

"The 128K context length enables handling of complex, long-form reasoning tasks that were previously impossible. This is a significant advancement."

"Developing Kimi K2 has been an incredible journey. We're proud to contribute this breakthrough model to the open-source community."

"Kimi K2 represents a significant leap forward in agentic AI. The model's ability to autonomously reason through complex problems and integrate with external tools makes it incredibly powerful for real-world applications. This is the future of AI assistance."

Frequently Asked Questions

Get answers to common questions about Kimi K2's capabilities, technical specifications, and usage.

Need technical support?

Access documentation, community support, and technical resources for Kimi K2.

Open-source model available at: Hugging Face • GitHub • API Documentation